I tried Copilot Vision, and it could change how you use Windows forever

Giving artificial intelligence eyes is always a tricky proposition. Would you want it to see everything you do, all the time? Absolutely not. But I think most of us would agree that AI visual assistance can be quite useful when you need it. Microsoft's new Copilot Vision may be one of the most promising applications of AI-based visual skills I've seen yet.

Microsoft introduced the Copilot Vision update for the Windows app and mobile apps (you can point your camera at things, and Vision can identify them for you).

Copilot's updates span memory, search, personalization, and vision features, draw on both homegrown (Microsoft AI, or MAI) and OpenAI GPT models, and amount to everything short of a brain transplant.

Having now seen Copilot Vision in action, I can say that, even though it's arriving in two stages, it's one of the most exciting and important updates in the bunch.

In the version currently available in the Copilot desktop app on supported Windows PCs, Copilot Vision can see the applications you're running on your desktop. When you open Copilot, either by selecting its icon or pressing the Copilot key on your keyboard, you can now select the new glasses icon.

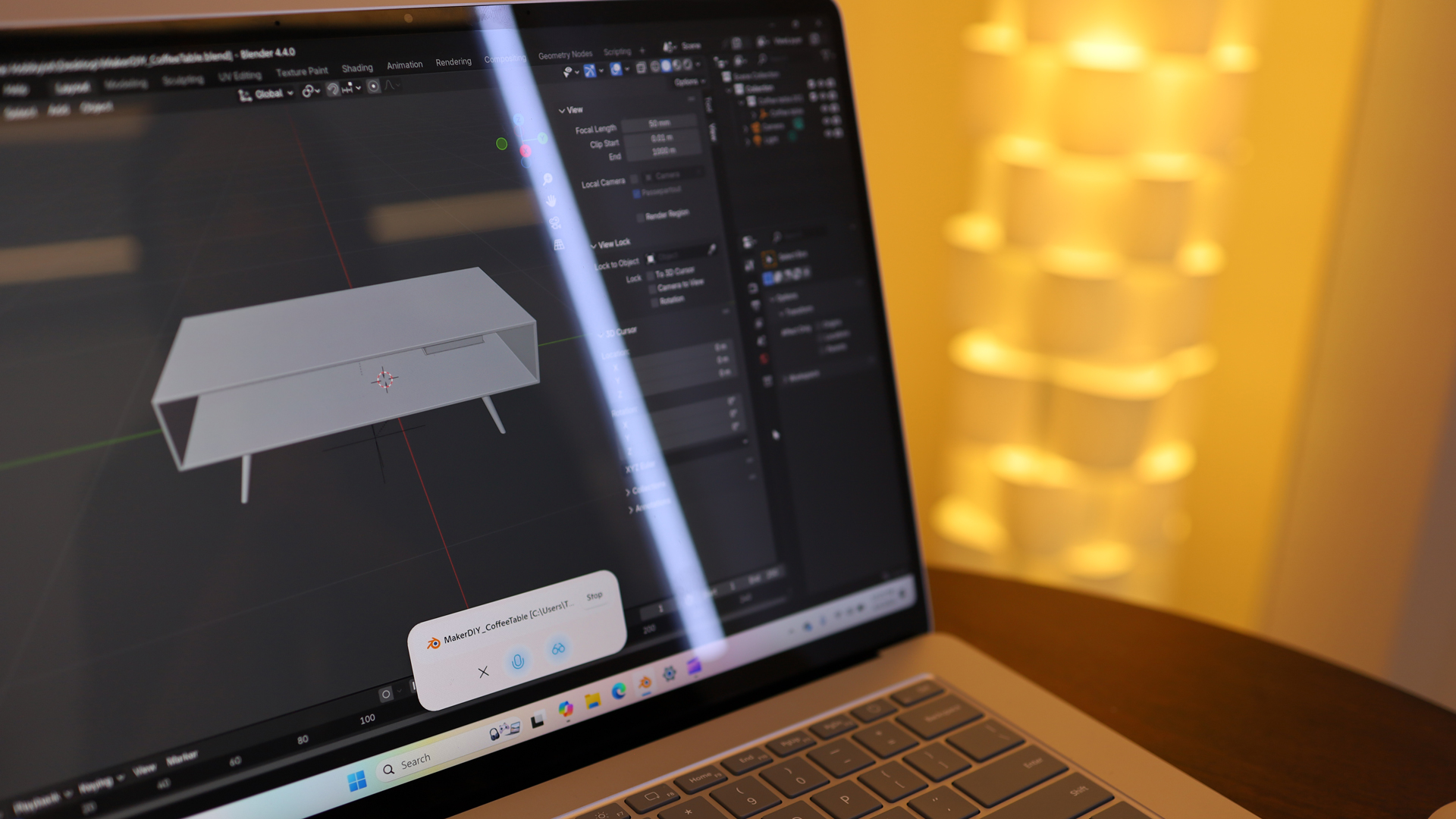

This brings up a list of open applications; in our case, there were two running: Blender 3D and Clipchamp. This means Copilot doesn't automatically monitor every application running in Windows.

We chose Blender 3D, and from that moment on, something about Windows changed for me. I realized that Copilot could actually see which application we were running and, instead of guessing our intent, it answered based on the application and even the specific project we were working on.

We had a 3D coffee table project open and, using our voice, asked how to make the table's design more traditional. Our request contained almost no details about the application or the project, but Copilot's answer, delivered in a lovely baritone, was completely in context.

Then we switched gears and asked how to add annotations. Copilot started to answer, but we interrupted and asked where to find the icon for adding annotations. Copilot quickly adjusted and told us exactly where to find it.

This can be very useful, because you no longer have to break your flow to explain which application or project you're working on. Copilot Vision sees it and knows.

Still, let me tell you what's coming next.

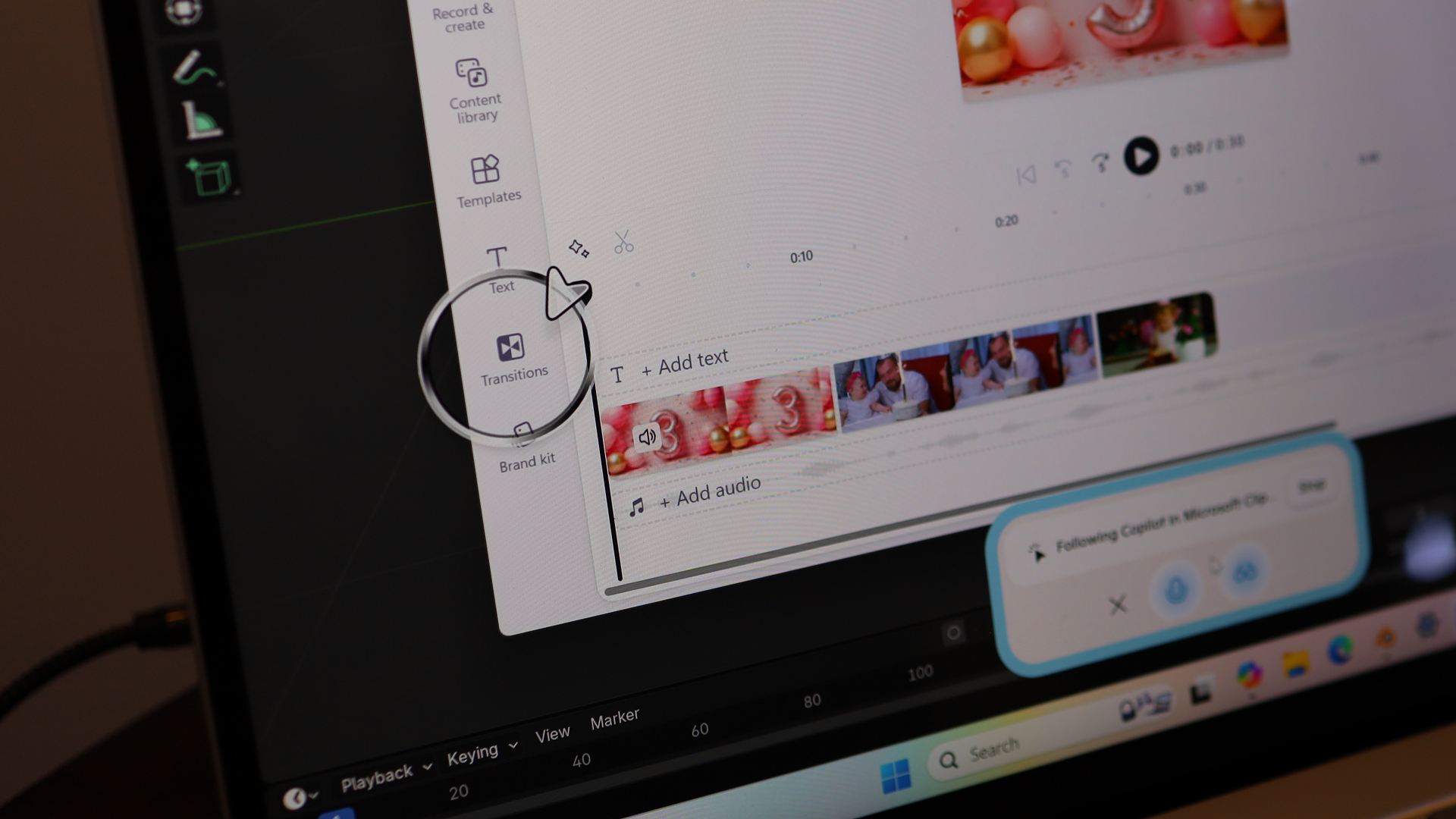

We followed the same steps to open Copilot and access the Vision component, but this time we pointed it at our open Clipchamp project.

We asked Copilot how to make our video transitions smoother. Instead of a text reply explaining what to do, Copilot Vision showed us where to find the necessary tool in the application.

A giant arrow appeared on screen inside an animated circle, pointing to the transition tool Copilot suggested we use as it explained the necessary steps. We ran this demo several times, and it still didn't always work, given how advanced the feature is.

Nevertheless, it pointed to a potentially exciting change in how we work with applications in Windows.

We also saw a demo video showing Copilot Vision digging through Photoshop to find the right tools. This, my friends, is in-app help on steroids.

Imagine a future where, in any open application, you use a text prompt or your voice to ask how to perform a task, and Copilot Vision digitally takes you by the hand. There's no sign yet that it will perform app-level actions on your behalf, but it could be an incredible visual assistant.

The good news is that at least the version of Copilot Vision that knows which application and project you're working on is available now. The bad news is that the Copilot Vision I really want, with its on-screen guidance, doesn't have a firm timeline. But I have to assume it won't take long. After all, we saw it live.